
Macworld

You might have seen headlines this week about the Samsung Galaxy S23 Ultra taking so-called “fake” moon pictures. Ever since the S20 Ultra, Samsung has had a feature called Space Zoom that marries its 10X optical zoom with massive digital zoom to reach a combined 100X zoom. In marketing shots, Samsung has shown its phone taking near-crystal clear pictures of the moon, and users have done the same on a clear night.

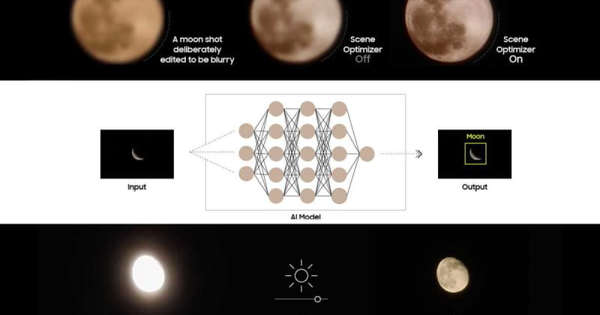

But a Redditor has proven that Samsung’s incredible Space Zoom is using a bit of trickery. It turns out that when taking pictures of the moon, Samsung’s AI-based Scene Optimizer does a whole lot of heavy lifting to make it look like the moon was photographed with a high-resolution telescope rather than a smartphone. So when someone takes a shot of the moon—in the sky or on a computer screen as in the Reddit post—Samsung’s computational engine takes over and clears up the craters and contours that the camera had missed.

In a follow-up post, they prove beyond much doubt that Samsung is indeed adding “moon” imagery to photos to make the shot clearer. As they explain, “The computer vision module/AI recognizes the moon, you take the picture, and at this point, a neural network trained on countless moon images fills in the details that were not available optically.” That’s a bit more “fake” than Samsung lets on, but it’s still very much to be expected.

Even without the investigative work, it should be fairly obvious that the S23 can’t naturally take clear shots of the moon. While Samsung says Space Zoomed shots using the S23 Ultra are “capable of capturing images from an incredible 330 feet away,” the moon is about 238,900 miles away, or approximately 1,261,392,000 feet. It’s also a quarter the size of the Earth. Smartphones have no problem taking clear photos of skyscrapers that are more than 330 feet away, after all.
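To put those numbers side by side, here is a quick back-of-the-envelope check. It assumes the moon's average distance of roughly 238,900 miles, which is the figure that the 1,261,392,000-foot number works out to, and the variable names are just for illustration.

```python
# Back-of-the-envelope check of the distances quoted above.
FEET_PER_MILE = 5280

# Assumption: the moon's average distance, about 238,900 miles.
moon_miles = 238_900
moon_feet = moon_miles * FEET_PER_MILE

# Samsung's quoted Space Zoom range for the S23 Ultra.
space_zoom_feet = 330

# How many times farther away the moon is than that quoted range.
times_farther = moon_feet // space_zoom_feet

print(f"Moon distance: {moon_feet:,} feet")
print(f"That is {times_farther:,} times the quoted 330-foot range")
```

In other words, the moon sits nearly four million times farther away than the range Samsung advertises.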

Of course, the moon’s distance doesn’t tell the whole story. The moon is essentially a light source set against a dark background, so the camera needs a bit of help to capture a clear image. Here’s how Samsung explains it: “When you’re taking a photo of the moon, your Galaxy device’s camera system will harness this deep learning-based AI technology, as well as multi-frame processing in order to further enhance details. Read on to learn more about the multiple steps, processes, and technologies that go into delivering high-quality images of the moon.”

It’s not all that different from features like Portrait Mode, Portrait Lighting, Night Mode, Magic Eraser, or Face Unblur. It’s all using computational awareness to add, adjust, and edit things that aren’t there. In the case of the moon, it’s easy for Samsung’s AI to make it seem like the phone is taking incredible pictures because Samsung’s AI knows what the moon looks like. It’s the same reason why the sky sometimes looks too blue or the grass too green. The photo engine is applying what it knows to what it sees to mimic a higher-end camera and compensate for normal smartphone shortcomings.

The difference here is that, while it’s common for photo-taking algorithms to segment an image into parts and apply different adjustments and exposure controls to them, Samsung is also using a limited form of AI image generation on the moon to blend in details that were never in the camera data to begin with. But you wouldn’t know it, because the moon’s details always look the same when viewed from Earth.
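For a rough sense of what "blending in details" can mean, here is a toy sketch. It is not Samsung's pipeline, which relies on a neural network. The function `blend_prior`, the `strength` parameter, and the tiny pixel grids are all hypothetical, chosen only to show the idea of mixing stored "known" detail into the segmented region of a capture.

```python
# Toy illustration only: NOT Samsung's actual method.
# Mixes a stored "prior" texture into the captured pixels, but only
# inside the region a segmentation mask has flagged (here, the moon).

def blend_prior(captured, prior, mask, strength=0.5):
    """Return a new image where masked pixels are a weighted mix of
    the captured value and the prior value; other pixels are unchanged."""
    result = []
    for row_c, row_p, row_m in zip(captured, prior, mask):
        result.append([
            (1 - strength) * c + strength * p if m else c
            for c, p, m in zip(row_c, row_p, row_m)
        ])
    return result


# A blurry capture: every pixel has the same brightness, so no detail.
captured = [[100, 100], [100, 100]]
# Hypothetical prior: what the subject is "known" to look like.
prior = [[50, 150], [100, 100]]
# Hypothetical mask: only the top row was recognized as the moon.
mask = [[True, True], [False, False]]

blended = blend_prior(captured, prior, mask)
print(blended)
```

The top row picks up contrast that was never in the capture, while the unmasked bottom row passes through untouched.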


Samsung says the S23 Ultra’s camera uses Scene Optimizer’s “deep-learning-based AI detail enhancement engine to effectively eliminate remaining noise and enhance the image details even further.”

Samsung

What will Apple do?

Apple is heavily rumored to add a periscope zoom lens to the iPhone 15 Ultra for the first time this year, and this controversy will no doubt factor into how it trains its AI. But you can be assured that the computational engine will do a fair amount of heavy lifting behind the scenes, as it does now.

That’s what makes smartphone cameras so great. Unlike point-and-shoot cameras, our smartphones have powerful brains that can help us take better photos and help bad photos look better. They can make nighttime photos seem like they were taken with good lighting and simulate the bokeh effect of a camera with an ultra-fast aperture.

And it’s what will let Apple get incredible results from 20X or 30X zoom from a 6X optical camera. Since Apple has thus far steered clear of astrophotography, I doubt it will go as far as sampling higher-resolution moon photos to help the iPhone 15 take clearer shots, but you can be sure that its Photonic Engine will be hard at work cleaning up edges, preserving details, and boosting the capabilities of the telephoto camera. And based on what we get in the iPhone 14 Pro, the results will surely be spectacular.

Whether it’s Samsung or Apple, computational photography has enabled some of the biggest breakthroughs over the past several years, and we’ve only just scratched the surface of what it can do. None of it is actually real. And if it were, we’d all be a lot less impressed with the photos we take with our smartphones.
